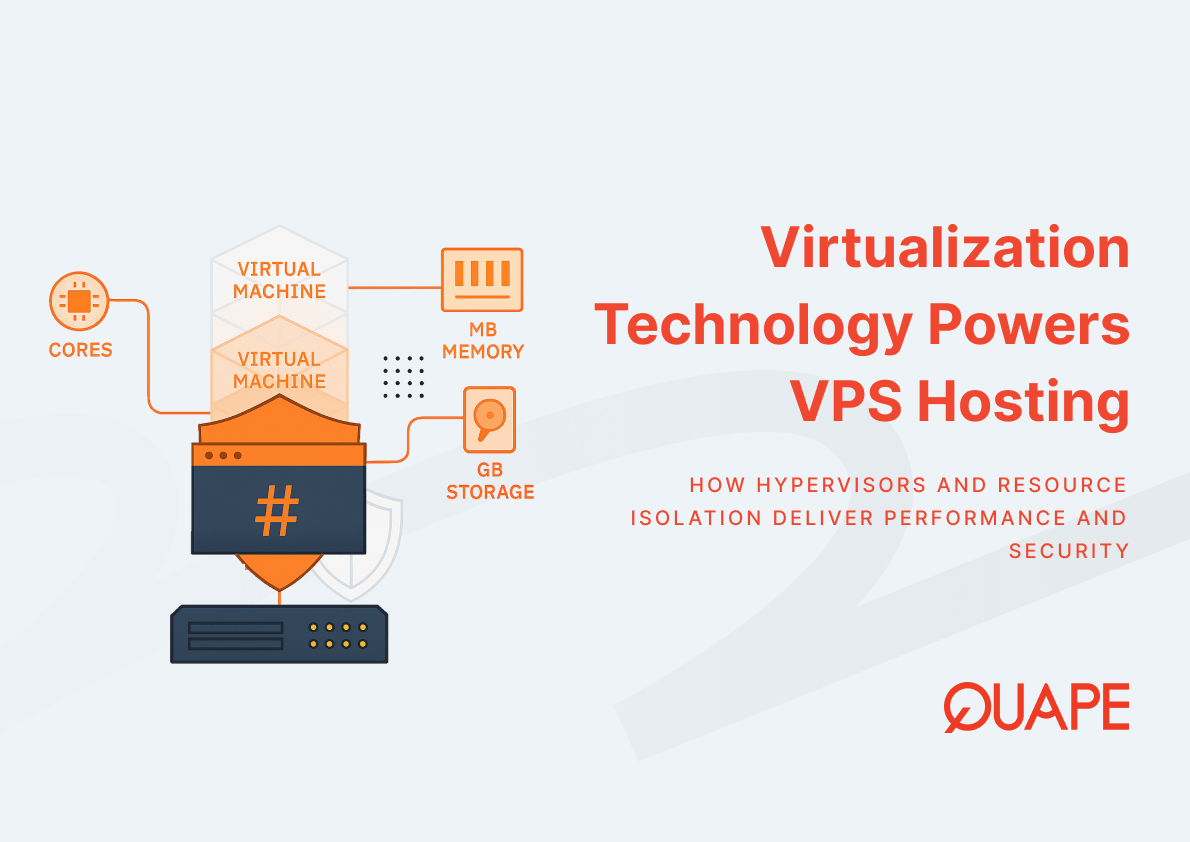

Virtualization technology transforms how businesses consume computing resources by enabling multiple isolated virtual machines to run on a single physical server. For IT managers, CTOs, and developers in Singapore’s fast-paced digital economy, understanding how virtualization powers VPS hosting directly impacts infrastructure decisions, cost efficiency, and application performance. The global server virtualization market, estimated at USD 9.15 billion in 2024, is projected to reach USD 17.25 billion by 2033, reflecting continued enterprise adoption driven by consolidation needs and digital transformation. Modern hypervisors, namespace isolation, and intelligent resource management have evolved beyond simple partitioning into sophisticated systems that deliver near-native performance while maintaining strict security boundaries. This article examines the technical components that enable VPS hosting to provide dedicated resources, predictable performance, and operational flexibility for production workloads.

Virtualization technology creates abstraction layers between physical hardware and virtual machines, allowing a hypervisor to coordinate how CPU cycles, memory pages, network packets, and storage blocks are allocated to each VM. This abstraction enables hosting providers to consolidate workloads, improve hardware utilization from 15-20% to 70-80%, and deliver isolated environments that behave like dedicated servers while sharing underlying infrastructure.
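To make the utilization figures concrete, here is a minimal arithmetic sketch. The function name and the 100-server fleet are illustrative assumptions, not data from any real deployment; the percentages are the ranges quoted above.

```python
def hosts_after_consolidation(host_count: int, current_util_pct: int, target_util_pct: int) -> int:
    """Estimate how many equally sized hosts are needed after consolidation.

    Demand is expressed in "host-percent": 100 hosts at 15% carry 1500 units.
    Ceiling division ensures that a partly filled host still counts as one host.
    """
    if not 0 < target_util_pct <= 100:
        raise ValueError("target_util_pct must be between 1 and 100")
    demand = host_count * current_util_pct
    return -(-demand // target_util_pct)  # integer ceiling division


if __name__ == "__main__":
    # Hypothetical fleet: 100 servers idling at 15%, consolidated to a 75% target.
    print(hosts_after_consolidation(100, 15, 75))
```

The model ignores headroom for failover and peak load, so a real capacity plan would land on a higher host count than this estimate.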

Key Takeaways

- Hypervisors like KVM and Xen mediate access to physical resources, with KVM achieving over 1.2 million IOPS for 8 KB requests when paired with virtio-blk drivers

- Namespaces provide process, network, and filesystem isolation, while cgroups enforce CPU, memory, and I/O limits at the kernel level

- Virtual NICs abstract network hardware, enabling flexible network topologies and bandwidth allocation without physical cabling changes

- Block device abstraction allows hypervisors to present storage to VMs while optimizing caching, snapshots, and live migration capabilities

- Software-based NVMe virtualization with I/O queue passthrough eliminates most virtualization overhead, delivering near-native storage performance

- Hardware-assisted mechanisms like translation lookaside buffer (TLB) coherence reduce memory virtualization penalties by up to 30%

- In 2023, 66% of companies reported increased agility after adopting virtualization, with server consolidation reducing hardware costs by up to 50%

- VPS hosting in Singapore leverages these technologies to provide businesses with predictable performance, compliance-ready infrastructure, and rapid scaling

Introduction to Virtualization Technology in Modern VPS Hosting

Virtualization technology fundamentally changed IT infrastructure economics by decoupling software environments from physical hardware constraints. Traditional dedicated servers tied each application or website to specific machines, creating utilization inefficiencies and inflexible capacity planning. Virtualization introduces a software layer that presents standardized virtual hardware to operating systems, while the hypervisor transparently maps these virtual resources to physical components across one or many servers.

Modern VPS hosting builds on this foundation by providing isolated virtual machines with guaranteed resource allocations. Unlike shared hosting where processes compete unpredictably for CPU and memory, VPS hosting uses virtualization’s resource management capabilities to enforce boundaries that prevent one tenant from impacting another’s performance. For businesses operating in Singapore’s competitive digital landscape, this separation ensures that traffic spikes on neighboring VMs cannot degrade application response times or cause service disruptions.

The technical sophistication of current virtualization platforms extends beyond simple resource partitioning. Advanced hypervisors integrate with hardware virtualization extensions in modern CPUs, reducing the performance overhead that plagued early virtualization implementations. Namespace isolation prevents processes in one VM from viewing or affecting processes in others, while control group (cgroup) mechanisms provide fine-grained enforcement of resource limits at the kernel level. Virtual network interface cards (NICs) create flexible networking topologies without physical constraints, and block device abstraction enables sophisticated storage features like snapshots and live migration without requiring VMs to understand the underlying storage architecture.

Key Components and Concepts of Virtualization Technology

Hypervisors: KVM, Xen, and Their Role

Hypervisors serve as the control plane that schedules virtual machine execution on physical processors, manages memory allocation, and coordinates I/O operations between VMs and hardware. KVM (Kernel-based Virtual Machine) integrates directly into the Linux kernel, transforming it into a Type-1 hypervisor that leverages kernel infrastructure for scheduling and memory management. This integration enables KVM to handle more than 1.2 million IOPS for 8 KB I/O requests when configured with virtio-blk paravirtualized drivers, approaching bare-metal storage performance for demanding database and application workloads.

Xen employs a different architectural approach, using a minimal hypervisor layer that runs directly on hardware with a privileged domain (dom0) handling device drivers and management tasks. This microkernel design reduces the hypervisor’s attack surface and allows specialized domains to manage specific hardware functions. Both KVM and Xen support hardware virtualization extensions like Intel VT-x and AMD-V, which enable processors to execute guest operating system instructions directly rather than requiring software emulation or binary translation.
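As a quick way to see whether a Linux host exposes these extensions, the sketch below looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flag in the text of `/proc/cpuinfo`. The function name is an illustrative assumption, and the check only applies to x86 Linux systems.

```python
from typing import Optional


def virtualization_extension(cpuinfo_text: str) -> Optional[str]:
    """Return the hardware virtualization extension advertised in cpuinfo text.

    On x86 Linux, each logical CPU has a "flags" line; "vmx" indicates
    Intel VT-x and "svm" indicates AMD-V. Returns None if neither is present.
    """
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None


if __name__ == "__main__":
    with open("/proc/cpuinfo", encoding="utf-8") as handle:
        print(virtualization_extension(handle.read()))
```

A missing flag can also mean the feature is disabled in firmware or hidden by an outer hypervisor, so a `None` result is a prompt to investigate rather than proof that the CPU lacks support.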

The choice between hypervisors affects performance isolation, resource overhead, and operational characteristics under contention scenarios. When multiple VMs compete for resources, different hypervisors exhibit varying degrees of performance predictability depending on their scheduling algorithms and I/O arbitration logic. For businesses evaluating fully managed versus self-managed VPS options, understanding these hypervisor differences helps inform decisions about performance expectations and troubleshooting complexity.

Namespaces and Resource Isolation

Namespaces provide isolation boundaries that prevent processes in one tenant environment from observing or interacting with processes, network interfaces, or filesystem mounts in another. They are the primary isolation mechanism on container-based VPS platforms; on KVM hosts, where each VM runs its own guest kernel as a process on the host, the hypervisor provides the main boundary and namespaces add a further layer around that host process. The Linux kernel implements several namespace types that collectively create an isolated execution environment: PID namespaces separate process ID spaces so each environment maintains its own init process and process tree, mount namespaces provide independent filesystem hierarchies, and network namespaces create isolated network stacks with separate routing tables and firewall rules.
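On Linux, the namespaces a process belongs to are visible as symbolic links under `/proc/<pid>/ns`, with targets of the form `net:[4026531992]`. The sketch below parses those links so two processes can be compared; the helper names are illustrative assumptions, and reading another process's links may require elevated privileges.

```python
import os
import re
from typing import Dict, Tuple

# Link targets look like "pid:[4026531836]": a namespace type and an inode number.
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> Tuple[str, int]:
    """Split a /proc/<pid>/ns link target into (namespace type, inode)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unrecognized namespace link: {target!r}")
    return match.group("kind"), int(match.group("inode"))


def namespaces_of(pid: str = "self") -> Dict[str, int]:
    """Map each entry in /proc/<pid>/ns to the inode of its namespace."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        result[name] = inode
    return result


if __name__ == "__main__":
    for name, inode in namespaces_of().items():
        print(f"{name:20s} {inode}")
```

Two processes share a namespace of a given type exactly when the inode numbers match, which makes this a simple way to check whether a workload really runs in its own PID, mount, or network namespace.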

This isolation operates at the kernel level, making it both efficient and secure. When a process in one VM attempts to enumerate running processes or network connections, the kernel filters results to show only resources within that VM’s namespace. This prevents information leakage between tenants and ensures that a compromised VM cannot directly observe activity in neighboring environments. UTS namespaces allow each VM to have independent hostname and domain name settings, while IPC namespaces isolate inter-process communication primitives like message queues and shared memory segments.

The security implications extend beyond simple process separation. By isolating network namespaces, virtualization prevents one VM from placing its network interface into promiscuous mode to capture traffic from other VMs on the same physical network interface. Mount namespaces ensure that even if a VM gains root access, it cannot mount or access filesystem resources allocated to other tenants. This multi-layered isolation creates defense in depth that protects hosting providers and their customers from both accidental interference and malicious activity.

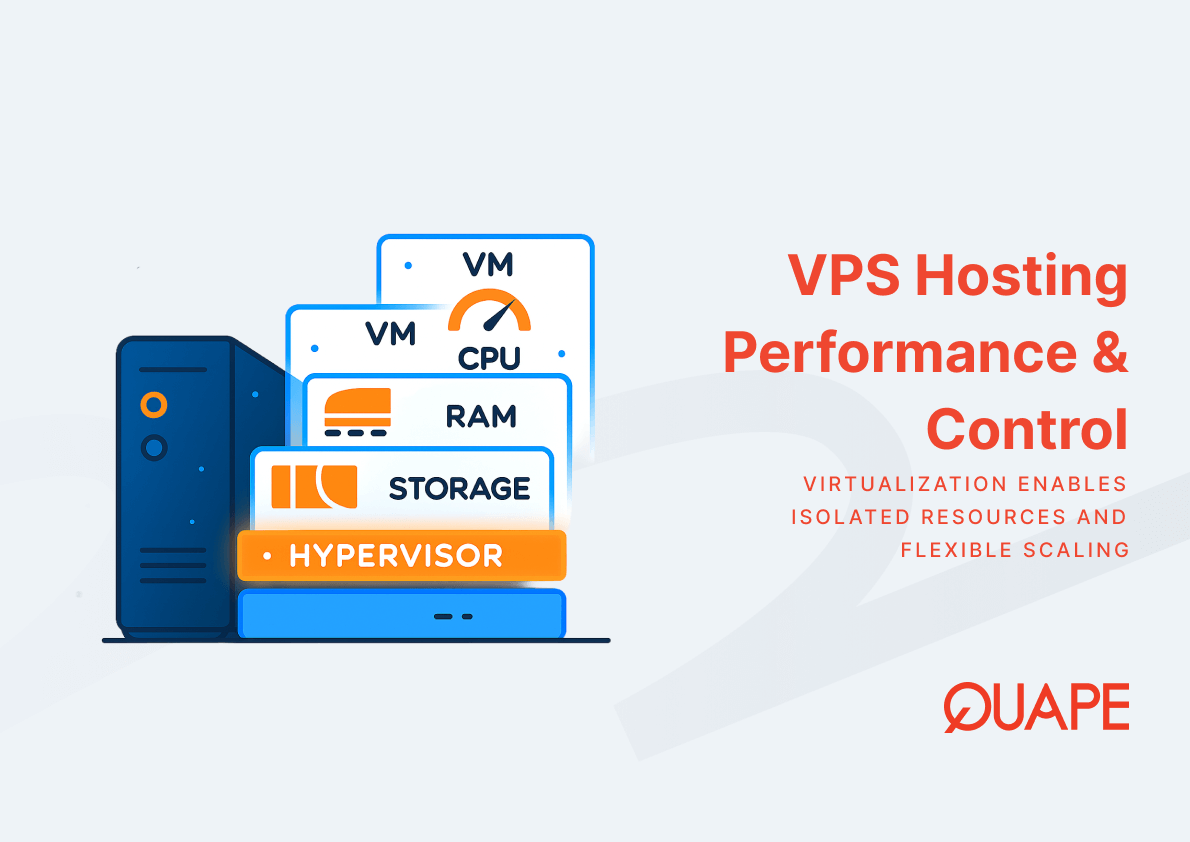

Cgroups for Resource Management

Control groups (cgroups) enforce resource limits and track resource usage at the kernel level, preventing any single VM from monopolizing CPU time, memory capacity, or I/O bandwidth. Unlike simple nice values that provide relative CPU priority, cgroups allow administrators to specify hard limits: a VM allocated 4 vCPUs is held to that much processing capacity, regardless of load on other VMs sharing the physical server. Memory cgroups prevent VMs from exceeding their allocated RAM, so an out-of-memory condition is handled within that VM’s group rather than affecting other tenants.
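In cgroup v2, a CPU cap is written to the `cpu.max` file as a quota and a period in microseconds, and a memory cap is written to `memory.max` in bytes. The sketch below only builds those strings; the function names are illustrative assumptions, and on a real host a management layer typically writes the files on the administrator's behalf.

```python
def cpu_max_line(vcpus: float, period_us: int = 100_000) -> str:
    """Build the value for a cgroup v2 cpu.max file.

    The format is "<quota> <period>" in microseconds: the group may use
    <quota> microseconds of CPU time in every <period> microseconds.
    """
    if vcpus <= 0:
        raise ValueError("vcpus must be positive")
    quota_us = int(vcpus * period_us)
    return f"{quota_us} {period_us}"


def memory_max_line(gib: int) -> str:
    """Build the value for a cgroup v2 memory.max file (a byte count)."""
    if gib <= 0:
        raise ValueError("gib must be positive")
    return str(gib * 1024 ** 3)


if __name__ == "__main__":
    # Hypothetical plan: 4 vCPUs and 8 GiB of RAM.
    print(cpu_max_line(4))
    print(memory_max_line(8))
```

A quota of four periods' worth of CPU time per period corresponds to four fully busy cores, which is how a "4 vCPU" allocation becomes a kernel-enforced ceiling.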

Block I/O cgroups control how much disk throughput and IOPS each VM can consume, preventing scenarios where one tenant running a backup operation saturates storage controllers and degrades database performance for other users. Network-related cgroup controllers classify and prioritize each VM’s traffic, and traffic-shaping rules on the virtual interface then enforce bandwidth limits so that each VM receives its allocated network capacity. These mechanisms work together to create predictable performance profiles that behave like dedicated servers, even when dozens of VMs share physical hardware.
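For block I/O, cgroup v2 expresses per-device limits in the `io.max` file as a device number followed by `rbps`, `wbps`, `riops`, and `wiops` keys. The following sketch builds such a line; the function name, the device number `259:0`, and the limit values are illustrative assumptions.

```python
from typing import Optional


def io_max_line(major: int, minor: int, *,
                rbps: Optional[int] = None, wbps: Optional[int] = None,
                riops: Optional[int] = None, wiops: Optional[int] = None) -> str:
    """Build one line for a cgroup v2 io.max file.

    rbps/wbps cap read/write bytes per second; riops/wiops cap read/write
    operations per second. Keys left as None are omitted from the line.
    """
    limits = {"rbps": rbps, "wbps": wbps, "riops": riops, "wiops": wiops}
    parts = [f"{key}={value}" for key, value in limits.items() if value is not None]
    if not parts:
        raise ValueError("at least one limit must be given")
    return f"{major}:{minor} " + " ".join(parts)


if __name__ == "__main__":
    # Hypothetical cap: 200 MiB/s and 5000 IOPS of writes on device 259:0.
    print(io_max_line(259, 0, wbps=200 * 1024 * 1024, wiops=5000))
```

Capping writes separately from reads is one way to keep a tenant's backup job from starving neighbors whose workloads are read-heavy.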

The hierarchical structure of cgroups enables sophisticated resource allocation strategies. Hosting providers can create parent cgroups for different service tiers, then nest individual VMs within those tiers to inherit appropriate limits. This allows dynamic reallocation when physical servers are under-utilized while maintaining guaranteed minimums during peak contention. For applications requiring consistent latency, such as real-time APIs or e-commerce platforms, cgroup enforcement prevents the performance variability that plagues shared hosting environments.
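The nesting described here can be modelled with a small tree in which a group's effective limit is the tightest limit found on the path to the root. This is a simplified sketch of hierarchical limits, not the kernel's implementation; the class, group names, and values are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Group:
    """A node in a toy cgroup-like hierarchy. A limit of None means unlimited."""
    name: str
    limit: Optional[int] = None
    parent: Optional["Group"] = None

    def effective_limit(self) -> Optional[int]:
        """Return the smallest limit among this group and all its ancestors."""
        limits = []
        node: Optional[Group] = self
        while node is not None:
            if node.limit is not None:
                limits.append(node.limit)
            node = node.parent
        return min(limits) if limits else None


if __name__ == "__main__":
    tier = Group("standard-tier", limit=64)      # hypothetical tier cap, in GiB
    vm_a = Group("vm-a", limit=16, parent=tier)  # stays within the tier
    vm_b = Group("vm-b", limit=128, parent=tier) # asks for more than the tier allows
    print(vm_a.effective_limit(), vm_b.effective_limit())
```

Because a child can never exceed its parent, a provider can raise or lower a whole tier by changing one value while individual VM settings stay untouched.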

Virtual NICs and Network Abstraction

Virtual network interface cards present standard network devices to virtual machines while the hypervisor manages how packets flow between VMs, physical network interfaces, and external networks. Each VM receives one or more virtual NICs that appear as standard Ethernet adapters to the guest operating system, complete with MAC addresses and standard driver interfaces. Behind this abstraction, the hypervisor implements virtual switches that forward packets based on MAC addresses, VLAN tags, or more sophisticated software-defined networking rules.
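The MAC-based forwarding mentioned above can be sketched as a learning switch: it records which port each source address was seen on and floods frames whose destination is still unknown. This is a toy model that ignores VLANs, broadcast handling, and table ageing; the class and port names are illustrative assumptions.

```python
from typing import Dict, Iterable, List


class LearningSwitch:
    """A minimal model of MAC learning in a virtual switch."""

    def __init__(self, ports: Iterable[str]) -> None:
        self.ports = set(ports)
        self.mac_table: Dict[str, str] = {}

    def receive(self, in_port: str, src_mac: str, dst_mac: str) -> List[str]:
        """Return the ports a frame is forwarded to.

        The source address is learned first. Unknown destinations are
        flooded to every port except the one the frame arrived on.
        """
        if in_port not in self.ports:
            raise ValueError(f"unknown port: {in_port}")
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            return sorted(self.ports - {in_port})
        if out_port == in_port:
            return []  # destination is on the arrival port; nothing to forward
        return [out_port]


if __name__ == "__main__":
    switch = LearningSwitch(["vnet0", "vnet1", "uplink"])
    print(switch.receive("vnet0", "52:54:00:00:00:01", "52:54:00:00:00:02"))
    print(switch.receive("vnet1", "52:54:00:00:00:02", "52:54:00:00:00:01"))
```

After the first exchange both addresses are known, so later frames between the two VMs go only to the relevant virtual port instead of being flooded.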

This abstraction enables flexible network topologies that would be impossible or expensive with physical hardware. VMs can be migrated between physical hosts without changing IP addresses because the virtual network follows the VM. Private networks can isolate groups of VMs for security purposes without requiring separate physical switches. Bandwidth allocation and quality-of-service policies can be adjusted in software rather than requiring hardware reconfiguration, and network performance optimizations can be applied at the virtual switch level to reduce latency for specific application traffic.

Paravirtualized network drivers like virtio-net significantly improve throughput and reduce CPU overhead compared to fully emulated NICs. By eliminating unnecessary hardware emulation and allowing the guest OS to communicate directly with the hypervisor’s network stack, virtio-net achieves multi-gigabit throughput with minimal processor consumption. For bandwidth-intensive applications like video streaming, API gateways, or content delivery, this efficiency translates directly into lower infrastructure costs and better end-user experience.

Block Device Abstraction and Storage Efficiency

Block device abstraction separates how virtual machines interact with storage from the underlying physical storage architecture, allowing hypervisors to present virtual disks backed by local SSDs, network-attached storage, or distributed storage systems. A VM sees a standard block device (like /dev/vda in Linux), but the hypervisor may store that virtual disk as a file, a logical volume, or distribute it across multiple physical devices for redundancy. This abstraction enables features like snapshots, thin provisioning, and live migration that would be impossible if VMs accessed physical storage directly.
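Thin provisioning and snapshots both follow from keeping a mapping between virtual block numbers and stored data. The toy model below allocates space only for blocks that have been written and takes a snapshot by copying the mapping rather than the data. It is a sketch of the idea, not of any real disk image format; all names are illustrative assumptions.

```python
from typing import Dict


class ThinDisk:
    """A toy thin-provisioned virtual disk backed by a block map."""

    def __init__(self, virtual_blocks: int, block_size: int = 4096) -> None:
        self.virtual_blocks = virtual_blocks
        self.block_size = block_size
        self._blocks: Dict[int, bytes] = {}  # only written blocks are stored

    def write(self, index: int, data: bytes) -> None:
        if not 0 <= index < self.virtual_blocks:
            raise IndexError("block index out of range")
        if len(data) != self.block_size:
            raise ValueError("data must be exactly one block")
        self._blocks[index] = bytes(data)

    def read(self, index: int) -> bytes:
        if not 0 <= index < self.virtual_blocks:
            raise IndexError("block index out of range")
        # Blocks that were never written read back as zeros.
        return self._blocks.get(index, bytes(self.block_size))

    def allocated_bytes(self) -> int:
        return len(self._blocks) * self.block_size

    def snapshot(self) -> Dict[int, bytes]:
        # Copy the map only; the immutable block payloads are shared.
        return dict(self._blocks)


if __name__ == "__main__":
    disk = ThinDisk(virtual_blocks=1024)
    disk.write(5, b"x" * 4096)
    snap = disk.snapshot()
    disk.write(5, b"y" * 4096)
    print(disk.allocated_bytes(), snap[5][:1], disk.read(5)[:1])
```

The guest sees a 4 MiB disk throughout, while the host stores a single block, and the snapshot keeps the old contents of block 5 even after the guest overwrites it.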

Modern storage virtualization incorporates intelligent caching strategies that adapt to workload behavior. Research on caching schemes for virtualized platforms shows that dynamically partitioning cache space based on each VM’s I/O patterns improves both performance-per-cost metrics and SSD endurance by reducing unnecessary write amplification. These optimizations operate transparently to the VM, which continues to see a simple block device while benefiting from sophisticated read-ahead algorithms, write coalescing, and cache eviction policies tuned for virtualized environments.

The emergence of NVMe storage with its low-latency command queues and parallel I/O capabilities prompted new virtualization approaches. Software-based NVMe virtualization using I/O queue passthrough techniques achieves near-native performance by allowing VMs to submit I/O commands directly to hardware queues with minimal hypervisor intervention. This architectural shift eliminates much of the virtualization penalty that previously made shared storage unsuitable for latency-sensitive databases and applications, as detailed in our analysis of NVMe VPS hosting performance benefits. For businesses running transaction processing systems or real-time analytics, this performance parity between virtualized and bare-metal storage removes a significant infrastructure constraint.

Practical Applications for Singapore Businesses

Singapore’s position as a digital hub and financial center creates unique infrastructure requirements where virtualization technology directly addresses regulatory, performance, and operational needs. Businesses subject to Singapore’s data sovereignty requirements leverage VPS hosting in Singapore to ensure that customer data remains within jurisdictional boundaries while maintaining the operational flexibility of cloud infrastructure. Virtualization’s namespace isolation and resource partitioning provide compliance-ready separation without requiring dedicated physical servers for each application or data classification level.

Financial services firms, e-commerce platforms, and SaaS providers operating in Singapore benefit from virtualization’s ability to deliver predictable performance for customer-facing systems. The combination of cgroup resource enforcement and hypervisor scheduling guarantees that high-traffic websites receive consistent CPU cycles and network bandwidth during peak periods, preventing the revenue impact of slow page loads or API timeouts. When local competitors respond within 200ms while your application takes 2 seconds, virtualization’s performance isolation becomes a competitive advantage rather than just an infrastructure detail.

Development teams use virtualization to create production-identical staging environments without duplicating physical infrastructure. Because hypervisors abstract hardware, developers can spin up VMs with the exact CPU, memory, and storage configuration of production systems, then tear them down when testing completes. This environment parity reduces the “works on my machine” failures that plague deployments, while virtualization’s snapshot capabilities allow teams to preserve specific configuration states for regression testing or incident reproduction. Understanding Singapore’s compliance landscape helps teams configure these environments with appropriate data handling and access controls from the start.

How VPS Hosting Supports and Enhances Virtualization Technology

VPS hosting productizes virtualization technology by packaging hypervisor capabilities, resource guarantees, and operational tools into service tiers that businesses can consume without managing physical infrastructure. Each VPS plan allocates specific quantities of vCPU, memory, NVMe storage, and network bandwidth, with the underlying virtualization stack enforcing these allocations through cgroups and hypervisor scheduling policies. This transforms abstract virtualization concepts into concrete infrastructure that teams can deploy, scale, and manage through APIs or control panels.

The cloud architecture underlying modern VPS hosting platforms extends basic virtualization with distributed storage, automated failover, and orchestration systems that handle common operational tasks. When a physical host fails, automated systems detect the failure, restart affected VMs on healthy hardware, and update network routing without requiring manual intervention. Live migration capabilities allow VPS instances to move between physical servers during maintenance windows without downtime, a feature impossible without virtualization’s hardware abstraction. Snapshot and backup systems leverage block device abstraction to capture VM state without interrupting running applications.

Performance advantages compound when VPS hosting combines current-generation processors, NVMe storage arrays, and optimized hypervisor configurations. KVM’s integration with Linux kernel features like huge pages and NUMA awareness reduces memory virtualization overhead, while virtio drivers minimize the CPU cost of I/O operations. For compute-intensive workloads like data analytics or application compilation, these optimizations mean that 8 vCPUs in a properly configured VPS deliver throughput comparable to 8 physical cores. Storage performance benefits similarly from NVMe virtualization techniques that achieve near-native IOPS and latency, making virtualized infrastructure viable for database workloads that previously required dedicated hardware.
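One reason huge pages reduce memory virtualization overhead is simple arithmetic: larger pages mean far fewer address mappings for the same amount of guest RAM. The sketch below counts pages for the common x86 sizes of 4 KiB and 2 MiB; the function name and the 8 GiB guest are illustrative assumptions.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def pages_needed(memory_bytes: int, page_bytes: int) -> int:
    """Number of pages of size page_bytes required to map memory_bytes."""
    if memory_bytes < 0 or page_bytes <= 0:
        raise ValueError("sizes must be positive")
    return -(-memory_bytes // page_bytes)  # integer ceiling division


if __name__ == "__main__":
    guest_ram = 8 * GIB  # hypothetical guest size
    small = pages_needed(guest_ram, 4 * KIB)
    huge = pages_needed(guest_ram, 2 * MIB)
    print(small, huge, small // huge)
```

Each 2 MiB page replaces 512 small pages, so a single TLB entry covers much more memory and address translation misses become less frequent.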

Conclusion

Virtualization technology has matured from a resource-sharing mechanism into a sophisticated platform that combines performance, security, and operational flexibility for production workloads. The technical components working together in modern VPS hosting, from KVM’s high-performance hypervisor to namespace isolation and intelligent storage abstraction, create infrastructure that behaves like dedicated servers while delivering the cost efficiency and agility of shared resources. For businesses in Singapore navigating digital transformation, regulatory compliance, and competitive pressure to deliver faster applications, understanding these virtualization fundamentals informs better infrastructure decisions and more effective resource planning.

Ready to deploy VPS infrastructure built on enterprise-grade virtualization? Contact our sales team to discuss how QUAPE’s KVM-based VPS hosting delivers the performance isolation and operational capabilities your applications require.

Frequently Asked Questions (FAQ)

What is the difference between Type-1 and Type-2 hypervisors in VPS hosting?

Type-1 hypervisors like KVM and Xen run directly on physical hardware with minimal overhead, making them suitable for production VPS hosting where performance matters. Type-2 hypervisors run on top of a host operating system (like VMware Workstation on Windows), adding an extra software layer that increases latency and reduces efficiency. Production VPS platforms almost always use Type-1 hypervisors to maximize resource utilization and minimize virtualization penalties.

How does virtualization isolation protect my VPS from other tenants?

Namespace isolation prevents processes in your VPS from seeing or interacting with processes in other VMs, while cgroups enforce hard limits on CPU, memory, and I/O that prevent resource exhaustion attacks. The hypervisor mediates all hardware access, so even if another tenant’s VM is compromised, kernel-level boundaries prevent lateral movement to your environment. This multi-layered approach provides security comparable to physically separate servers.

Can virtualization performance match dedicated servers for database workloads?

Modern virtualization with paravirtualized drivers and NVMe passthrough achieves near-native performance for most database workloads. KVM with virtio-blk can deliver over 1.2 million IOPS, while hardware-assisted memory management reduces virtualization overhead to negligible levels. For the majority of transactional databases and data warehouses, properly configured VPS infrastructure eliminates the performance gap that historically favored dedicated servers, with the added benefit of snapshot capabilities and live migration.

What happens to my VPS if the physical server fails?

High-availability VPS platforms use distributed storage and automated failover systems that detect hardware failures and restart affected VMs on healthy servers within minutes. Because your VM’s virtual disks are stored on redundant storage systems rather than local drives, the hypervisor can boot your VPS on any compatible physical host. This recovery capability, impossible without virtualization’s hardware abstraction, provides faster restoration than replacing failed components in dedicated servers.

How do I choose between different hypervisors for VPS hosting?

For most business applications, the hypervisor choice matters less than the hosting provider’s implementation quality and hardware selection. KVM’s kernel integration and broad hardware support make it the dominant choice for Linux-based VPS hosting, while Xen’s microkernel architecture appeals to organizations prioritizing security isolation. Focus on the provider’s track record for performance consistency, their SLA commitments, and whether they use current-generation processors and NVMe storage rather than the specific hypervisor brand.

Does virtualization overhead significantly impact application performance?

Modern virtualization overhead is typically 2-5% for CPU-bound workloads when using hardware virtualization extensions and paravirtualized drivers. For I/O operations, virtio drivers and NVMe passthrough reduce storage overhead to near zero, while virtual networking achieves multi-gigabit throughput with minimal CPU consumption. The resource guarantees provided by virtualization often deliver more consistent performance than bare-metal servers running multiple applications that compete for resources without isolation.

Can I run containers inside a VPS, and is there a performance penalty?

Yes, containers run efficiently inside VPS environments because both technologies use overlapping kernel features like namespaces and cgroups. Running Docker or Kubernetes inside a VPS adds minimal overhead beyond the base virtualization layer, giving you the isolation benefits of VMs with the deployment flexibility of containers. This nested approach is common for development teams who want VPS-level resource guarantees while using containers for application packaging and orchestration.

What virtualization features enable zero-downtime VPS maintenance?

Live migration allows running VMs to move between physical hosts while maintaining network connections and application state. The hypervisor copies memory pages to the destination server while the VM continues executing, then performs a brief pause (typically under 1 second) to transfer the final state before resuming on the new host. This capability, combined with distributed storage that both hosts can access, enables hosting providers to perform hardware maintenance without scheduling downtime windows for customer applications.
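The copy-then-pause sequence described above is often called pre-copy migration, and its behavior can be estimated with a simple model: each round transfers the memory dirtied during the previous round, and the VM is paused once the remainder is small. The function, its parameters, and the example figures are illustrative assumptions, not measurements from any platform.

```python
from typing import Tuple


def precopy_estimate(memory_mib: float, dirty_rate_mib_s: float,
                     bandwidth_mib_s: float, stop_threshold_mib: float = 64.0,
                     max_rounds: int = 30) -> Tuple[int, float]:
    """Estimate (copy rounds, final pause in seconds) for pre-copy migration.

    Each round sends the currently remaining memory while the VM keeps
    running and dirtying pages at dirty_rate_mib_s. When the remainder is
    at or below stop_threshold_mib, or max_rounds is reached, the VM is
    paused and the remainder is sent in one final transfer.
    """
    if bandwidth_mib_s <= 0:
        raise ValueError("bandwidth must be positive")
    remaining = float(memory_mib)
    rounds = 0
    while remaining > stop_threshold_mib and rounds < max_rounds:
        round_seconds = remaining / bandwidth_mib_s
        remaining = dirty_rate_mib_s * round_seconds  # pages dirtied meanwhile
        rounds += 1
    downtime_seconds = remaining / bandwidth_mib_s
    return rounds, downtime_seconds


if __name__ == "__main__":
    # Hypothetical VM: 8 GiB RAM, 100 MiB/s dirty rate, 1000 MiB/s link.
    print(precopy_estimate(8192, 100, 1000))
```

The model shows why the pause stays short only when the network is much faster than the rate at which the VM dirties memory; if the two are close, the rounds stop shrinking and the final pause grows.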
