High-traffic websites demand infrastructure that can maintain consistent performance under load while controlling operational costs. Virtual Private Server hosting delivers isolated compute resources, elastic scaling options, and intelligent traffic distribution without requiring dedicated hardware investment. For IT managers and CTOs operating in Singapore’s competitive digital market, VPS hosting provides the foundation for handling concurrent user requests, managing peak traffic periods, and maintaining service availability across distributed user bases. As global data center electricity consumption rises, projected to reach approximately 945 TWh by 2030 according to the International Energy Agency, efficient resource allocation through VPS infrastructure becomes both a performance requirement and an operational necessity.

What VPS Hosting Means for High-Traffic Operations

VPS hosting allocates dedicated CPU cores, memory, and storage to individual virtual machines while sharing underlying physical infrastructure. This model separates your application environment from neighboring tenants through hypervisor-level isolation. Unlike shared hosting where resources fluctuate based on other sites’ activity, VPS hosting guarantees baseline performance through reserved capacity. For high-traffic scenarios, this isolation prevents resource contention during concurrent request spikes, enabling predictable response times even when neighboring VMs experience load variations.

Key Takeaways

- Horizontal scaling adds VPS instances to distribute traffic, but requires effective load balancing to translate capacity into actual performance gains

- Load balancing strategy significantly impacts tail response time (worst-case latency), not just average response metrics

- Caching layers reduce repetitive compute operations and database queries, decreasing backend load and allowing fewer instances to serve more traffic

- Dynamic auto-scaling adjusts active instances based on real-time demand, minimizing idle server energy costs while maintaining responsiveness

- NVMe storage on VPS infrastructure accelerates disk I/O operations that often bottleneck high-concurrency applications

- Singapore’s regional positioning reduces latency for APAC audiences while supporting data sovereignty requirements for regulated industries

- Energy efficiency in scaling strategies becomes critical as data center power consumption accounts for approximately 21% of metered electricity in markets like Ireland

Key Components of VPS Hosting for High Traffic

Horizontal Scaling for Growing Traffic

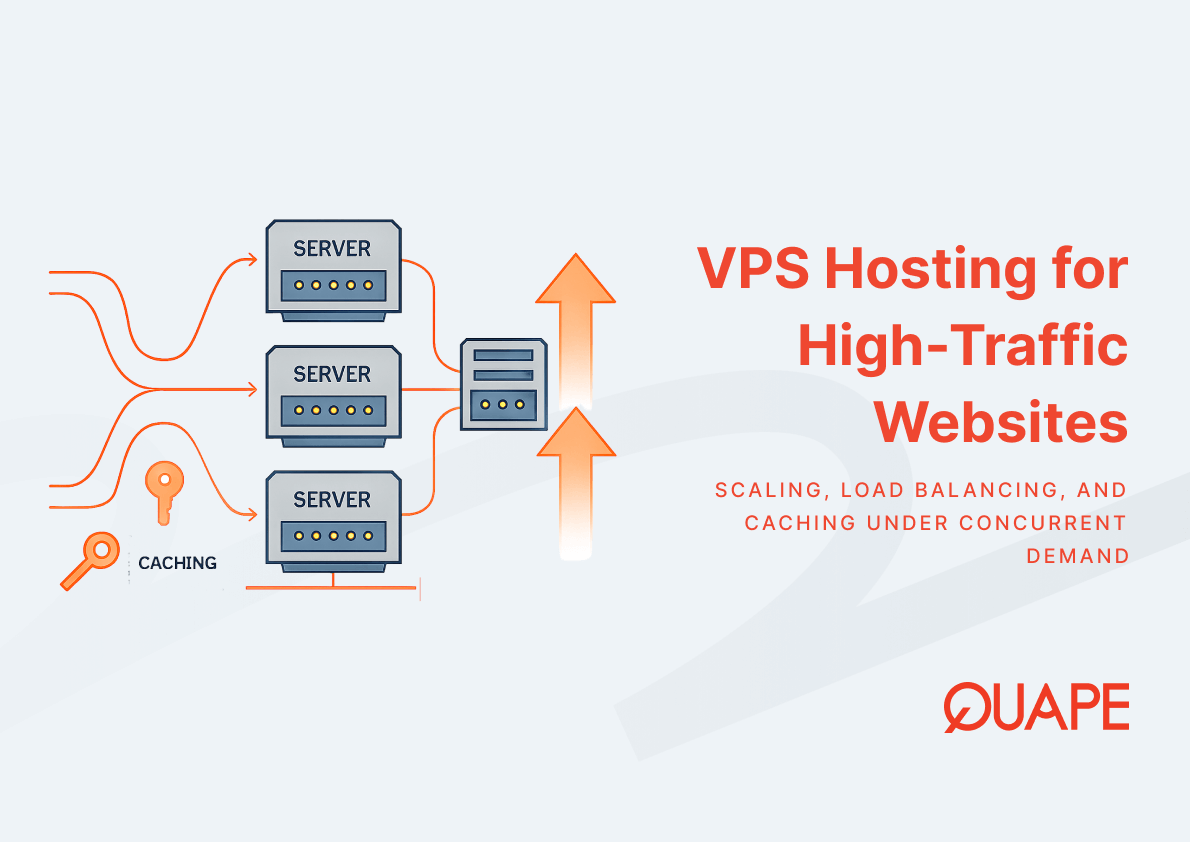

Horizontal scaling distributes incoming traffic across multiple VPS instances rather than upgrading a single server’s specifications. When you add instances to handle increased load, each VPS processes a subset of total requests, preventing any single point of capacity exhaustion. Research demonstrates that horizontal scaling improves both average and tail response times in data center servers, though the performance gains don’t scale linearly with added resources. The relationship between instance count and throughput depends on how effectively your application handles distributed state, session management, and inter-instance communication.

This approach connects directly to virtualization technology that enables rapid VM provisioning, allowing infrastructure teams to deploy additional capacity within minutes rather than procuring physical hardware. For businesses projecting growth, understanding how VPS scaling costs accumulate helps balance performance requirements against budget constraints. The VPS market reflects this scaling demand, with industry analysts projecting growth from approximately USD 5.1 billion in 2024 to USD 14.1 billion by 2033, representing a compound annual growth rate near 12% according to IMARC Group market analysis.

Load Balancing to Optimize Requests

Load balancing determines which VPS instance receives each incoming request, distributing traffic to prevent individual servers from becoming overwhelmed while others sit underutilized. The balancing algorithm you select affects not just average performance but specifically tail latency, which represents the worst-case response time users experience. Studies indicate that load-balancing strategy has a significant impact on tail response time when applications are horizontally scaled, meaning two identical VPS clusters can deliver markedly different user experiences based solely on distribution logic.
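To make the difference between average and tail latency concrete, here is a minimal sketch in Python. The latency samples are invented for illustration, and `percentile` is a hypothetical helper using the nearest-rank method, not a function from any particular monitoring tool.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]

# Hypothetical response times in milliseconds: 98 fast requests, 2 slow ones.
latencies_ms = [20] * 98 + [900] * 2

mean_ms = sum(latencies_ms) / len(latencies_ms)
p50_ms = percentile(latencies_ms, 50)
p99_ms = percentile(latencies_ms, 99)

print(f"mean={mean_ms:.1f} ms  p50={p50_ms} ms  p99={p99_ms} ms")
```

In this made-up sample the mean and median both look healthy, while the 99th percentile exposes the slow requests that a small share of users actually experience. That is why tail percentiles are worth tracking alongside averages when comparing distribution strategies.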

Simple round-robin distribution cycles through available instances sequentially, while weighted algorithms direct more traffic to higher-capacity VPS nodes. More sophisticated approaches like least-connection routing send requests to whichever instance currently handles the fewest active sessions. For mission-critical applications, failover mechanisms detect unresponsive instances and reroute traffic automatically, maintaining uptime even when individual VPS nodes experience issues. This traffic management connects with network performance optimization strategies that reduce latency between load balancers and backend instances.
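The three selection strategies described above can be sketched in a few lines of Python. This is an illustrative model only, not how any specific load balancer is implemented; the class names and the `vps-a`/`vps-b` backend labels are assumptions made for the example.

```python
import itertools

class RoundRobin:
    """Cycle through backends in a fixed order."""
    def __init__(self, backends):
        self._cycle = itertools.cycle(list(backends))

    def pick(self):
        return next(self._cycle)

class WeightedRoundRobin:
    """Naive weighting: repeat each backend `weight` times in the cycle.
    Real balancers usually interleave picks more smoothly than this."""
    def __init__(self, weights):
        expanded = [name for name, w in weights.items() for _ in range(w)]
        self._cycle = itertools.cycle(expanded)

    def pick(self):
        return next(self._cycle)

class LeastConnections:
    """Send each request to the backend with the fewest active sessions."""
    def __init__(self, backends):
        self.active = {name: 0 for name in backends}

    def pick(self):
        # Ties go to the backend listed first.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call when the request on this backend has finished.
        self.active[backend] -= 1

if __name__ == "__main__":
    lc = LeastConnections(["vps-a", "vps-b"])
    print([lc.pick() for _ in range(3)])
```

Round-robin and weighted selection ignore what the backends are currently doing, whereas least-connections needs the balancer to track when each request starts and finishes. That extra bookkeeping is the price of reacting to uneven request durations.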

Advanced implementations use dynamic load balancing that adapts in real time to changing traffic patterns. Research shows that dynamic or adaptive load-balancing can reduce energy use, improve resource utilization, and adapt to changes in demand across virtual machines. This capability becomes particularly valuable during traffic spikes, where static distribution rules may concentrate load inefficiently.

Managing Request Concurrency Efficiently

Request concurrency refers to how many simultaneous connections your VPS infrastructure can process before queuing or dropping requests. Each incoming HTTP request consumes server threads, memory buffers, and CPU cycles until the response completes. High-traffic websites must configure web servers, application runtimes, and database connection pools to handle concurrent load without exhausting available threads or creating memory pressure.
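A useful rule of thumb for estimating concurrency is Little's Law: in a stable system, the average number of requests in flight equals the arrival rate multiplied by the average response time. The sketch below applies it in Python; the function names and the traffic figures are hypothetical and only serve to show the arithmetic.

```python
def concurrent_requests(arrival_rate_per_s, avg_response_time_s):
    """Little's Law: average in-flight requests = arrival rate x time in system."""
    return arrival_rate_per_s * avg_response_time_s

def max_throughput(worker_count, avg_response_time_s):
    """Upper bound on requests per second when each worker serves
    one request at a time."""
    return worker_count / avg_response_time_s

# Hypothetical site: 500 requests per second, 200 ms average response time.
in_flight = concurrent_requests(500, 0.2)
ceiling = max_throughput(200, 0.2)
print(f"in flight: {in_flight:.0f}, throughput ceiling: {ceiling:.0f} req/s")
```

The same relationship shows why slow responses are expensive: if the average response time doubles, the number of threads, buffers, and pooled connections needed to sustain the same request rate doubles with it.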

Web server software like Nginx uses event-driven architecture to handle thousands of concurrent connections within a single worker process, while application servers often spawn worker processes or threads for parallel request processing. The VPS specifications you select must provide sufficient CPU cores and memory to support your expected concurrency level. A VPS with 4 cores may efficiently run 200 concurrent PHP-FPM workers if most of them spend their time waiting on database or network I/O and memory allows, but attempting to run 500 workers would create CPU contention and memory pressure, degrading response times across all requests.

Database connection pooling limits how many concurrent queries reach your database server, preventing connection exhaustion under load. Applications should reuse pooled connections rather than establishing new database connections for each request. When properly configured, connection pooling reduces connection overhead and allows your database VPS to serve more concurrent traffic with the same hardware resources.
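The pooling idea can be illustrated with a small, generic sketch. It assumes a caller-supplied `factory` that opens a connection (hypothetical here; in practice this would be your database driver's connect call), and it is not a substitute for the pooling built into most drivers and frameworks.

```python
import queue
from contextlib import contextmanager

class ConnectionPool:
    """Fixed-size pool: connections are opened once and then reused."""

    def __init__(self, factory, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout=None):
        # Blocks while every connection is in use; raises queue.Empty
        # if `timeout` seconds pass without one becoming free.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            # Always hand the connection back, even if the caller raised.
            self._idle.put(conn)

if __name__ == "__main__":
    pool = ConnectionPool(factory=object, size=2)  # object() stands in for a real connection
    with pool.connection() as conn:
        print("borrowed", conn)
```

Because the pool size is fixed, it also acts as a cap: no matter how many requests arrive, at most `size` queries reach the database at once, and the rest wait briefly instead of opening new connections.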

Caching Layers for Faster Content Delivery

Caching stores frequently accessed data in fast-retrieval locations, eliminating repetitive database queries and compute operations. For high-traffic sites, caching layers dramatically reduce backend load, enabling fewer VPS instances to serve substantially more users. Memory-based caches like Redis or Memcached retrieve data in microseconds compared to milliseconds for database queries, improving response times while decreasing CPU utilization on application servers.

Multiple caching tiers work together: opcode caches store compiled PHP bytecode in memory, object caches hold database query results, and full-page caches serve complete HTML responses without executing application code. Each tier intercepts requests at a different point in the request lifecycle. The closer to the user your cache operates, the less infrastructure load each request generates. Advanced caching strategies, especially at the edge, help reduce load on origin servers, which allows you to handle more traffic with fewer backend VPS instances.
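As an illustration of the object-cache tier, the sketch below shows a cache-aside lookup with a time-to-live in Python. It keeps entries in a plain in-process dictionary rather than Redis or Memcached, and the `TTLCache` class, the `loader` callback, and the 30-second TTL are all assumptions made for the example.

```python
import time

class TTLCache:
    """Cache-aside with expiry: serve from memory while an entry is fresh,
    otherwise call the loader (for example a database query) and store the result."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]              # cache hit: backend is not touched
        value = loader(key)              # cache miss: go to the backend
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key):
        """Drop an entry early, for example after the underlying row changes."""
        self._entries.pop(key, None)

if __name__ == "__main__":
    cache = TTLCache(ttl_seconds=30)
    load_product = lambda key: f"row-for-{key}"  # hypothetical database lookup
    print(cache.get_or_load("product:42", load_product))
    print(cache.get_or_load("product:42", load_product))  # served from cache
```

The TTL is the main tuning knob: a longer value cuts more backend load but serves stale data for longer, so content that changes often usually needs either a short TTL or explicit invalidation on write.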

The effectiveness of caching depends on your content’s access patterns. Content requested frequently by many users (product pages, article archives) benefits tremendously from caching, while unique per-user content (account dashboards, shopping carts) requires more selective caching strategies. Storage performance affects cache efficiency: NVMe storage on VPS infrastructure accelerates disk-based caching operations, reducing the performance gap between memory and persistent storage layers.

Practical Applications for High-Traffic Websites in Singapore

Singapore serves as a strategic location for VPS hosting targeting APAC audiences, combining low regional latency with robust telecommunications infrastructure. E-commerce platforms operating during regional peak hours benefit from Singapore’s positioning as a hosting hub that minimizes round-trip times to major Southeast Asian cities. Financial services and healthcare applications must often comply with data sovereignty requirements that mandate customer data remain within specific jurisdictions, making Singapore-hosted VPS solutions essential for regulated industries.

SMEs scaling digital services face traffic patterns that fluctuate throughout the day, with evening hours typically generating peak load. VPS hosting allows these organizations to add capacity for peak periods without maintaining oversized infrastructure during off-hours. E-commerce applications particularly benefit from this elasticity during promotional campaigns, when traffic may spike 5x to 10x above baseline levels.
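A rough sizing calculation shows how this elasticity translates into instance counts. The Python sketch below is an assumption-laden illustration: `desired_instances` is a hypothetical helper, and the per-instance capacity, 70% target utilization, and instance limits are placeholder values you would replace with figures from your own load tests.

```python
import math

def desired_instances(current_rps, rps_per_instance,
                      target_utilization=0.7,
                      min_instances=2, max_instances=20):
    """Instances needed to serve current_rps while keeping each instance
    at or below target_utilization of its measured capacity."""
    if rps_per_instance <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity must be positive and utilization in (0, 1]")
    usable_rps = rps_per_instance * target_utilization
    needed = math.ceil(current_rps / usable_rps)
    # Clamp to the configured floor and ceiling.
    return max(min_instances, min(max_instances, needed))

# Hypothetical baseline of 300 requests per second, 200 rps per instance.
for label, rps in [("baseline", 300), ("5x spike", 1500), ("10x spike", 3000)]:
    print(label, desired_instances(rps, rps_per_instance=200))
```

With these placeholder numbers, the 10x spike would need more instances than the configured maximum allows, which is a useful signal in itself: the ceiling, not just the scaling rule, has to be sized for the largest campaign you expect.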

API services supporting mobile applications or third-party integrations require consistent response times regardless of request volume. A VPS-based API gateway can scale horizontally behind load balancers, ensuring that authentication, rate limiting, and request routing remain responsive even when backend services experience temporary slowdowns. For development teams building microservices architectures, each service can run on appropriately sized VPS instances, optimizing resource allocation across the application stack.

How VPS Hosting Supports and Improves Website Performance

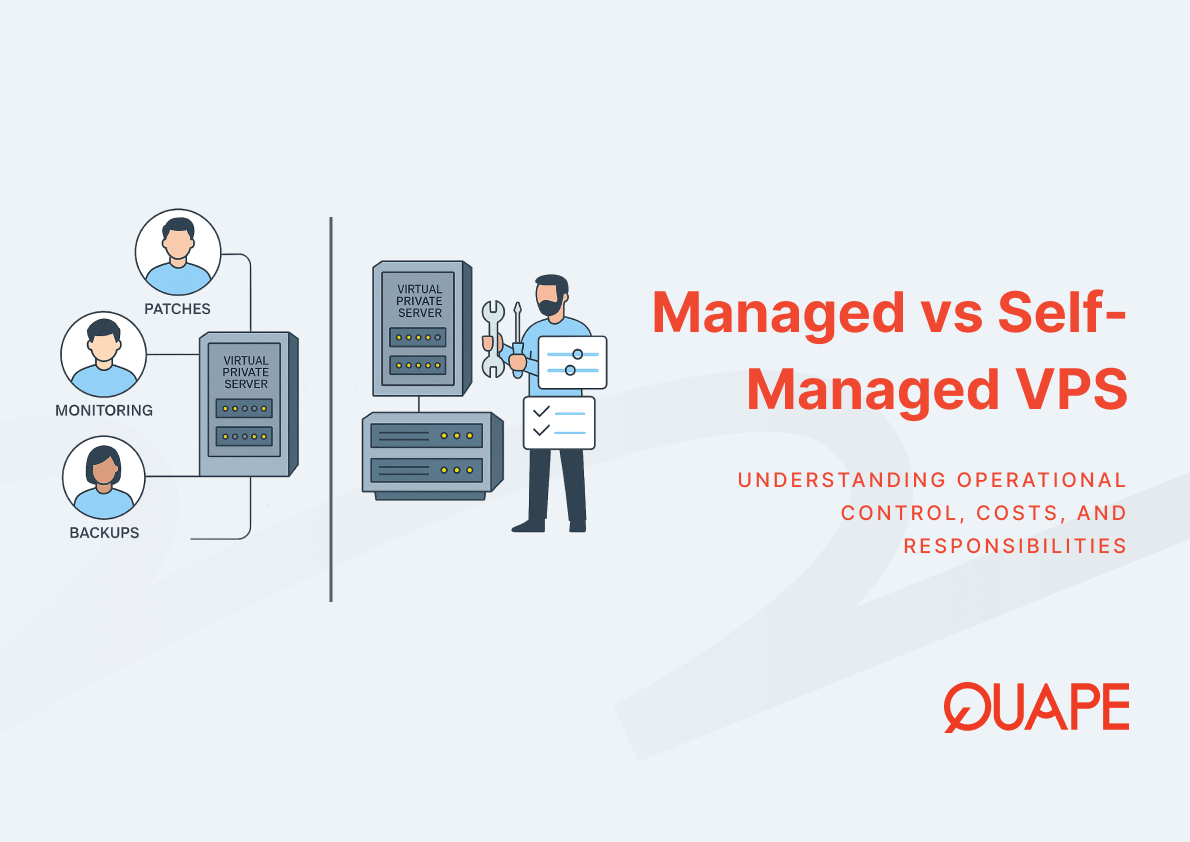

VPS hosting from providers like QUAPE delivers dedicated CPU cores, guaranteed memory allocation, and isolated storage that eliminate the resource contention inherent in shared hosting. Daily automated backups protect against data loss without manual intervention, while SSL certificate integration secures data transmission between users and servers. Operating system flexibility allows infrastructure teams to select Ubuntu, Debian, AlmaLinux, or Windows environments based on application requirements.

Resource dedication directly impacts application performance under load. When traffic spikes arrive, your VPS maintains consistent CPU and memory access because neighboring VMs cannot consume your allocated resources. This isolation proves particularly valuable for database workloads where consistent disk I/O performance affects query response times. Understanding how to select appropriate RAM and CPU specifications ensures your VPS configuration matches your application’s resource consumption patterns.

Security isolation in VPS environments prevents vulnerabilities in neighboring applications from affecting your infrastructure. Implementing cybersecurity best practices at the VPS level, including firewall configuration, port management, and intrusion detection, creates defense layers that protect against common attack vectors. For teams lacking in-house DevOps resources, fully managed VPS options handle system administration tasks while maintaining customization flexibility.

Disaster recovery planning becomes more straightforward with VPS infrastructure because virtual machines can be snapshotted, replicated, and restored rapidly. Where dedicated server hardware failures might require hours for replacement and restoration, VPS environments typically restore from snapshots within minutes. This rapid recovery time objective (RTO) significantly reduces potential revenue loss during outages.

Research indicates that joint auto-scaling and load balancing, implemented without centralized queueing, can asymptotically minimize both delay and idle-server energy wastage in large systems. This optimization becomes increasingly important as data center energy consumption grows. The balance between performance and energy efficiency isn’t just an environmental consideration; it affects operational costs as electricity consumption in data centers continues rising, with Irish data centers accounting for approximately 21% of metered electricity consumption according to national statistics.

Strategic Infrastructure for Growing Traffic Demands

VPS hosting provides the technical foundation for handling concurrent user requests while maintaining cost efficiency through elastic scaling. The architectural components work together: horizontal scaling distributes load, intelligent load balancing optimizes distribution, caching reduces unnecessary compute operations, and dedicated resources ensure consistent performance. For organizations operating in Singapore’s digital economy, VPS infrastructure delivers regional latency advantages while supporting compliance with data localization requirements.

Effective VPS implementation requires understanding how these components interact with your specific application architecture and traffic patterns. Contact our sales team at https://www.quape.com/contact-us/ to discuss VPS configurations matched to your high-traffic requirements.

Frequently Asked Questions

When should I scale horizontally versus vertically for high traffic?

Horizontal scaling (adding more VPS instances) works best when your application supports distributed operation and traffic increases significantly. Vertical scaling (upgrading individual VPS resources) suits applications with single-instance dependencies or moderate traffic growth. Many implementations combine both approaches for optimal flexibility.

How does load balancing improve performance beyond just distributing requests?

Effective load balancing reduces tail latency by preventing individual instances from becoming overwhelmed while others remain underutilized. Health checks detect failing instances and reroute traffic automatically, maintaining availability. Advanced algorithms can balance not just request count but actual resource utilization across instances.

What caching strategy provides the best performance improvement?

Multi-tier caching delivers the strongest results: opcode caching accelerates code execution, object caching reduces database load, and page caching eliminates application processing entirely for static content. The optimal strategy depends on your application’s data access patterns and update frequency.

Why does Singapore location matter for high-traffic websites?

Singapore provides low-latency connectivity to major APAC population centers, typically achieving 30-80ms response times to regional users compared to 150-300ms from US or European datacenters. For industries requiring data sovereignty compliance, Singapore hosting ensures customer data remains within regional jurisdiction.

How much traffic can a single VPS instance handle?

Traffic capacity depends on your application’s resource consumption per request. A well-optimized WordPress site might serve 50-100 concurrent users per vCPU, while a complex e-commerce platform might handle 10-20. Load testing your specific application provides accurate capacity planning data.

What’s the relationship between VPS specifications and concurrent connections?

CPU cores determine parallel request processing capability, while memory affects how many concurrent connections you can maintain. A 4-core VPS with 8GB RAM typically handles 200-400 concurrent connections efficiently, but connection pooling and application optimization significantly influence these limits.

Should I implement auto-scaling for variable traffic patterns?

Auto-scaling reduces costs during low-traffic periods while maintaining performance during spikes. Implementation requires monitoring infrastructure, defining scaling thresholds, and ensuring your application handles dynamic instance addition gracefully. The complexity investment pays off when traffic variability exceeds 3x between peak and off-peak periods.

How does VPS energy efficiency affect operational costs?

As data center electricity costs rise, efficient resource utilization through auto-scaling and load optimization reduces both environmental impact and operational expenses. Providers increasingly factor power consumption into pricing, making energy-efficient scaling strategies financially beneficial beyond environmental considerations.
