If your business depends on fast page loads, high database throughput, or handling concurrent user requests, the storage protocol behind your VPS hosting infrastructure directly affects revenue, customer experience, and operational efficiency. NVMe-based VPS hosting replaces the bottlenecks of legacy SATA storage with PCIe-connected drives that support massively parallel I/O, and published benchmarks and real-world workloads show roughly 5 to 8 times the performance of SATA configurations. For IT managers and CTOs evaluating hosting solutions in Singapore, understanding how NVMe architecture delivers higher IOPS, lower latency, and better resource utilization helps you select infrastructure that meets current demands and leaves room for growth in data-intensive applications.

NVMe VPS hosting refers to virtual private server environments that use Non-Volatile Memory Express storage drives connected via PCIe lanes rather than the older SATA interface. This architectural shift allows each storage device to communicate directly with the CPU through high-bandwidth PCIe channels, bypassing the protocol overhead and queuing limitations inherent in SATA-based systems. The result is storage that responds faster to read and write requests, handles more simultaneous operations, and delivers consistent performance under heavy workloads.

Key Takeaways

- NVMe uses PCIe lanes for direct CPU communication, eliminating SATA’s bandwidth and latency bottlenecks while supporting up to 65,535 I/O queues compared to SATA’s single 32-command queue

- Enterprise NVMe SSDs deliver approximately 5.45× more sequential read bandwidth and 5.88× more random-read IOPS than enterprise SATA SSDs in controlled testing

- Real-world database and virtualized workloads show up to 8× better client-side performance with NVMe-backed storage compared to SATA configurations

- PCIe-based SSDs accounted for approximately 70.5% of the data center SSD market in 2024, reflecting widespread adoption in hyperscale and cloud infrastructure

- Modern NVMe virtualization mechanisms achieve 97-100% of native I/O performance for virtual machines, making NVMe VPS hosting competitive with dedicated servers for I/O-intensive applications

- The global data center SSD market is projected to grow from USD 49.01 billion in 2025 to USD 167.64 billion by 2031, driven largely by NVMe adoption

- Singapore-based businesses benefit from NVMe VPS infrastructure that supports low-latency regional connectivity, data sovereignty requirements, and performance standards necessary for Asia-Pacific digital commerce

Key Components and Concepts Behind NVMe VPS Performance

NVMe vs SATA Storage Protocols: Why Architecture Matters

The fundamental difference between NVMe and SATA storage protocols lies in how they manage communication between storage devices and the server’s processor. SATA was designed for spinning hard drives and uses a serial interface that processes storage commands through a single queue holding up to 32 commands at once. This architecture creates a bottleneck when multiple applications or users simultaneously request data, forcing commands to wait in line regardless of available CPU resources.

NVMe was purpose-built for solid-state storage and runs over the PCIe bus, which connects to the CPU through dedicated lanes. According to IBM’s technical documentation, NVMe SSDs use the PCIe bus natively rather than the older SATA bus, allowing much higher bandwidth and lower latency. This direct connection removes intermediate controller layers that add microseconds of delay to each transaction. More importantly, NVMe supports massively parallel I/O with up to 65,535 independent queues, each holding up to 65,536 commands. phoenixNAP contrasts this with SATA’s single 32-command queue, and the implication for VPS hosting is clear: dozens of virtual machines can issue storage requests at the same time without waiting for one shared queue to clear.

The simplified I/O stack in NVMe reduces software overhead by removing unnecessary protocol translation steps. When an application requests data from a SATA drive, the request passes through the legacy AHCI (Advanced Host Controller Interface) protocol, originally designed for mechanical drives, and then through the SATA controller before reaching the storage media. NVMe removes this translation layer: the host driver writes commands into submission queues in host memory and rings a doorbell register on the drive, which fetches them directly over PCIe, so each I/O costs less CPU time. As a result, storage devices can deliver I/O performance much closer to the raw capabilities of the underlying NAND flash memory.

Understanding IOPS and Queue Depth in VPS Performance

Input/output operations per second (IOPS) measures how many discrete read or write operations storage can complete within one second, serving as the primary metric for evaluating storage responsiveness under workload pressure. High IOPS capacity becomes critical when your VPS hosts database queries, serves dynamic web pages, or processes API requests that each trigger multiple small file operations. A MySQL database performing thousands of row lookups per second, or a WordPress installation generating pages from distributed cache files, both depend on storage that can handle many small I/O requests concurrently.
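One common way to measure the IOPS a VPS can actually sustain is the open-source fio benchmark. The sketch below assumes fio is installed and uses `/mnt/nvme/fio-testfile`, a hypothetical path on the disk under test (fio creates a 1G file there). It builds a 4 KiB random-read job that resembles database row lookups and extracts the read IOPS from fio’s JSON report. The helper names and defaults are illustrative, not a standard tool.

```python
import json
import shlex
import subprocess


def build_fio_cmd(target: str, iodepth: int = 32, runtime_s: int = 30) -> list[str]:
    """Build an fio command for a 4 KiB random-read test (illustrative defaults)."""
    return [
        "fio",
        "--name=randread-test",
        f"--filename={target}",
        "--rw=randread",          # random reads, similar to index and row lookups
        "--bs=4k",                # small blocks, typical of database page reads
        f"--iodepth={iodepth}",   # outstanding I/Os kept in flight
        "--ioengine=libaio",      # Linux asynchronous I/O engine
        "--direct=1",             # bypass the page cache to measure the disk itself
        "--size=1G",
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",
        "--output-format=json",
    ]


def read_iops(fio_json: str) -> float:
    """Extract read IOPS from fio's JSON report (first job group)."""
    report = json.loads(fio_json)
    return float(report["jobs"][0]["read"]["iops"])


def run_fio(cmd: list[str]) -> float:
    """Run fio and return the measured read IOPS."""
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return read_iops(result.stdout)


if __name__ == "__main__":
    cmd = build_fio_cmd("/mnt/nvme/fio-testfile")  # hypothetical path
    print(shlex.join(cmd))
    # print(f"read IOPS: {run_fio(cmd):,.0f}")  # uncomment on a machine with fio
```

Repeating the run at several `--iodepth` values shows how a drive’s IOPS responds to queue depth.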

Queue depth directly influences IOPS by determining how many pending I/O requests the storage system can track and schedule at once. At a queue depth of one, the drive must finish each operation before it receives the next, leaving most of its internal parallelism idle. Deeper queues let the controller spread requests across many NAND channels and dies at once and reorder them for efficiency, which is how SSDs reach their rated IOPS. NVMe’s support for up to 65,535 queues lets the operating system give each CPU core its own queue pair, so processes and virtual machines running across those cores do not compete for a single set of command slots, enabling parallelism that scales with workload complexity.
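Little’s Law gives a quick way to reason about this: the number of I/Os in flight equals IOPS multiplied by average latency. The sketch below applies it with illustrative numbers (an assumed 80 µs per command, not a measurement) to show how many outstanding commands a fast drive needs to stay busy, and the ceiling a fixed 32-slot queue would impose. For 4 KiB reads, real SATA SSDs hit their ~600 MB/s link limit (about 146,000 IOPS) well before this ceiling.

```python
def required_outstanding(target_iops: int, latency_us: int) -> float:
    """Little's Law: I/Os in flight = throughput x latency."""
    return target_iops * latency_us / 1_000_000


def iops_ceiling(outstanding: int, latency_us: int) -> float:
    """Maximum IOPS when at most `outstanding` commands can be in flight."""
    return outstanding * 1_000_000 / latency_us


# Illustrative assumption: each command takes 80 microseconds to complete.
LATENCY_US = 80

# To sustain 500,000 IOPS at 80 us each, about 40 commands must be in flight.
print(required_outstanding(500_000, LATENCY_US))   # 40.0

# A single 32-slot queue caps the same device at 400,000 IOPS.
print(iops_ceiling(32, LATENCY_US))                # 400000.0
```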

Enterprise workloads show why queue depth matters in practice. During peak traffic, an e-commerce platform might simultaneously process payment transactions, update inventory databases, serve product images, and log user analytics. Each of these operations generates storage I/O requests. With shallow SATA queuing, these requests pile up behind one another and create latency spikes that users experience as slow checkouts or delayed page rendering. NVMe’s deep parallel queuing services these requests concurrently, keeping response times more consistent as the number of concurrent users grows. This is what lets high-traffic websites absorb demand surges without storage becoming the first bottleneck.

Role of PCIe Lanes in NVMe VPS Hosting Speed

PCIe lanes function as dedicated data highways between the storage device and CPU, with each lane carrying data in both directions at once. Modern NVMe drives typically connect using four PCIe lanes (designated x4), while some high-performance enterprise drives use eight or sixteen lanes. The number of lanes, together with the PCIe generation, sets the maximum theoretical bandwidth: PCIe 3.0 x4 provides approximately 3.94 GB/s per direction, while PCIe 4.0 x4 doubles that to roughly 7.88 GB/s. This bandwidth ceiling defines how quickly large sequential operations such as database backups, log file writes, or virtual machine snapshots can complete.
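Those figures follow from each generation’s transfer rate and line encoding. The sketch below reproduces them from the PCIe 3.0, 4.0, and 5.0 signalling rates (8, 16, and 32 GT/s, all with 128b/130b encoding); PCIe 5.0 is included only for comparison. The results are theoretical one-direction ceilings, and packet and protocol overhead lower real throughput further.

```python
from fractions import Fraction

# Raw transfer rate (GT/s) and line-encoding efficiency for each PCIe generation.
PCIE_GENERATIONS = {
    "3.0": (8, Fraction(128, 130)),
    "4.0": (16, Fraction(128, 130)),
    "5.0": (32, Fraction(128, 130)),
}


def pcie_bandwidth_gbps(generation: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer moves one bit per lane; divide by 8 to convert bits to bytes.
    return float(gt_per_s * efficiency * lanes / 8)


for gen in PCIE_GENERATIONS:
    print(f"PCIe {gen} x4: {pcie_bandwidth_gbps(gen, 4):.2f} GB/s")
```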

In virtualization environments, PCIe lane allocation becomes more complex because the physical server’s total lane count must be distributed across multiple NVMe drives, network interfaces, and other PCIe devices. A server with 64 PCIe lanes might allocate 16 lanes to network cards, 32 lanes to four NVMe drives (8 lanes each), and the remaining lanes to management controllers. Proper lane distribution ensures that no single resource becomes a bottleneck when multiple VMs simultaneously perform storage-intensive operations. When hosting providers architect their infrastructure to support NVMe VPS hosting, they must balance lane allocation to prevent contention that would undermine NVMe’s performance advantages.
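A lane plan like the one above can be sanity-checked with simple arithmetic before hardware is ordered. The sketch below uses the hypothetical 64-lane server from the example; the device names and the `lane_budget` helper are illustrative, and real platforms add constraints (slot wiring, lane bifurcation, PCIe switches) that this check ignores.

```python
from dataclasses import dataclass


@dataclass
class PcieDevice:
    name: str
    lanes_each: int
    count: int = 1


def lane_budget(total_lanes: int, devices: list[PcieDevice]) -> int:
    """Return the lanes left over, or raise if the plan oversubscribes the CPU."""
    used = sum(d.lanes_each * d.count for d in devices)
    if used > total_lanes:
        raise ValueError(f"plan needs {used} lanes but only {total_lanes} exist")
    return total_lanes - used


# Hypothetical plan from the example: 16 lanes of NICs, four NVMe drives at x8.
plan = [
    PcieDevice("network cards", lanes_each=16),
    PcieDevice("NVMe drive", lanes_each=8, count=4),
]
print(lane_budget(64, plan))  # 16 lanes left for management controllers etc.
```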

The data transfer rate enabled by sufficient PCIe lanes supports workloads that legacy SATA infrastructure simply cannot accommodate. Consider a scenario where three VMs on the same physical host simultaneously run full-text search indexing, process uploaded video files, and compile large codebases. If these VMs share a SATA SSD pool limited to approximately 600 MB/s total throughput, each VM receives roughly 200 MB/s, creating significant delays. The same workload distributed across NVMe drives with adequate PCIe lanes can provide each VM with multi-gigabyte-per-second bandwidth, completing tasks in a fraction of the time while maintaining low latency for other operations.
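The arithmetic behind that example is easy to reproduce. The sketch below splits a shared throughput ceiling evenly across three VMs and estimates how long each VM would take to move a hypothetical 50 GB of data. The 6,000 MB/s figure for the NVMe case is an assumed sustained throughput chosen for illustration, not a benchmark result.

```python
def per_vm_share(total_mb_s: float, vm_count: int) -> float:
    """Even split of a shared throughput ceiling (MB/s) across VMs."""
    return total_mb_s / vm_count


def transfer_seconds(data_gb: float, mb_s: float) -> float:
    """Time to move `data_gb` gigabytes at `mb_s` megabytes per second."""
    return data_gb * 1000 / mb_s


JOB_GB = 50  # hypothetical amount of data each VM must read or write

for label, total in [("SATA (~600 MB/s)", 600), ("NVMe (assumed 6,000 MB/s)", 6000)]:
    share = per_vm_share(total, 3)
    print(f"{label}: {share:.0f} MB/s per VM, {transfer_seconds(JOB_GB, share):.0f} s per job")
```

Real contention is rarely an even split, but the ratio between the two cases is what drives the difference in completion time.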

Impact on Real-World Workloads: Databases, APIs, and CMS Platforms

Database workloads demonstrate NVMe’s performance advantages most clearly because they combine random read patterns, mixed read-write operations, and strict latency requirements. MySQL and PostgreSQL databases serving production applications typically perform thousands of small random reads per second as they retrieve scattered table rows to satisfy joins and index lookups. SATA SSDs struggle with this access pattern because every random read competes for a single 32-command queue, and AHCI protocol overhead adds latency to each operation. NVMe spreads these random reads across parallel queues, reducing query execution time and enabling higher transaction throughput per virtual CPU core.

REST APIs that power mobile applications and microservices architectures generate storage loads characterized by unpredictable request timing and variable payload sizes. When an API endpoint receives a burst of simultaneous requests, it must quickly read configuration data, validate credentials against database records, fetch requested resources, and log the transactions. This workflow creates dozens of small I/O operations per API call. The storage system must handle these operations with consistent low latency to meet API response time SLAs, typically measured in milliseconds. NVMe’s ability to process these mixed workloads without queuing delays means APIs hosted on NVMe VPS infrastructure can serve more requests per second while maintaining predictable response times.
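Because API SLAs are usually written against tail latency rather than averages, it helps to check a high percentile of measured response times. The sketch below computes a nearest-rank percentile over a list of latency samples. The samples and the 50 ms budget are hypothetical; in practice they would come from your load-testing tool or access logs.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample covering `pct` percent of the data."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_sla(samples: list[float], budget_ms: float, pct: float = 99) -> bool:
    """True if the chosen percentile of response times fits within the budget."""
    return percentile(samples, pct) <= budget_ms


# Hypothetical response times (ms): mostly fast, with two slow outliers.
latencies_ms = [12.0] * 98 + [85.0, 120.0]
print(percentile(latencies_ms, 50))            # 12.0
print(percentile(latencies_ms, 99))            # 85.0
print(meets_sla(latencies_ms, budget_ms=50))   # False
```

The mean of these samples is under 14 ms and looks healthy; the 99th percentile exposes the slow tail that queuing delays, including storage queuing, tend to create.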

WordPress and other CMS platforms combine database queries, file system operations for themes and plugins, and caching mechanisms that all depend on storage performance. When WordPress generates a complex page, it might execute 50 database queries, read 30 PHP files, check several cache files, and write session data. Each component adds latency, and the cumulative effect determines page generation time before any network transmission occurs. Hosting WordPress on NVMe VPS infrastructure reduces storage latency for each of these operations, directly improving Time to First Byte (TTFB) metrics that influence both user experience and search engine rankings. Sites experiencing high concurrency benefit even more because NVMe maintains low latency as simultaneous page generation requests increase.
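To see why per-operation latency adds up, the sketch below multiplies the operation counts from the example page (50 queries, 30 PHP file reads, an assumed 4 cache-file checks, and one session write) by an assumed per-operation storage latency. The 150 µs and 30 µs figures are illustrative assumptions, not measurements, and the model treats every operation as a storage access issued one after another. In a real deployment OPcache, the database buffer pool, and the OS page cache absorb many of these reads, so the `miss_ratio` parameter scales the count down.

```python
# Operation counts from the example page; "several" cache checks assumed to be 4.
PAGE_OPS = {"db_queries": 50, "php_file_reads": 30, "cache_checks": 4, "session_writes": 1}


def storage_ms_per_page(ops: dict[str, int], latency_us: float, miss_ratio: float = 1.0) -> float:
    """Serial storage time per page: operations that reach disk x per-op latency."""
    disk_ops = sum(ops.values()) * miss_ratio
    return disk_ops * latency_us / 1000


# Illustrative per-operation latencies, not measured values.
for label, latency_us in [("SATA (assumed 150 us)", 150), ("NVMe (assumed 30 us)", 30)]:
    print(f"{label}: {storage_ms_per_page(PAGE_OPS, latency_us):.2f} ms of storage wait per page")
```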

Real Performance Impact for Businesses in Singapore

Singapore SMBs operating digital commerce platforms experience the performance benefits of NVMe VPS hosting most directly during regional peak traffic hours and promotional events. When a local retailer launches a flash sale targeting customers across Southeast Asia, the website simultaneously handles product browsing, inventory checks, payment processing, and order confirmation workflows. Each customer interaction generates database queries and file operations that must complete within milliseconds to prevent cart abandonment. Singapore’s strategic position as a regional connectivity hub means hosting infrastructure here serves customers across Indonesia, Malaysia, Thailand, and the Philippines with sub-50ms latency, but only if the underlying storage can keep pace with request volume.

Data sovereignty requirements and compliance frameworks in Singapore create additional performance considerations that NVMe infrastructure addresses. Financial services firms, healthcare providers, and government contractors often need to keep data within Singapore’s jurisdiction while matching the performance of global cloud platforms. NVMe VPS hosting lets these organizations achieve both objectives: data stays in Singapore data centers while storage performance supports demanding applications. When audit logs, transaction records, and customer data must be written durably to compliant storage, NVMe’s high write IOPS and low latency help keep that compliance overhead from degrading application responsiveness.

Singapore’s digital infrastructure continues evolving toward cloud-native architectures and containerized workloads that amplify the benefits of high-performance storage. Microservices deployments running dozens of containers on each VPS instance generate distributed I/O patterns as each container maintains its own logs, temporary files, and state information. The cumulative I/O demand from 20 containers sharing a VPS can easily exceed what SATA infrastructure was designed to handle, causing performance degradation as container density increases. NVMe’s parallel queuing architecture scales naturally with container count, enabling Singapore businesses to maximize VPS resource utilization without hitting storage bottlenecks that would otherwise require expensive dedicated server infrastructure.

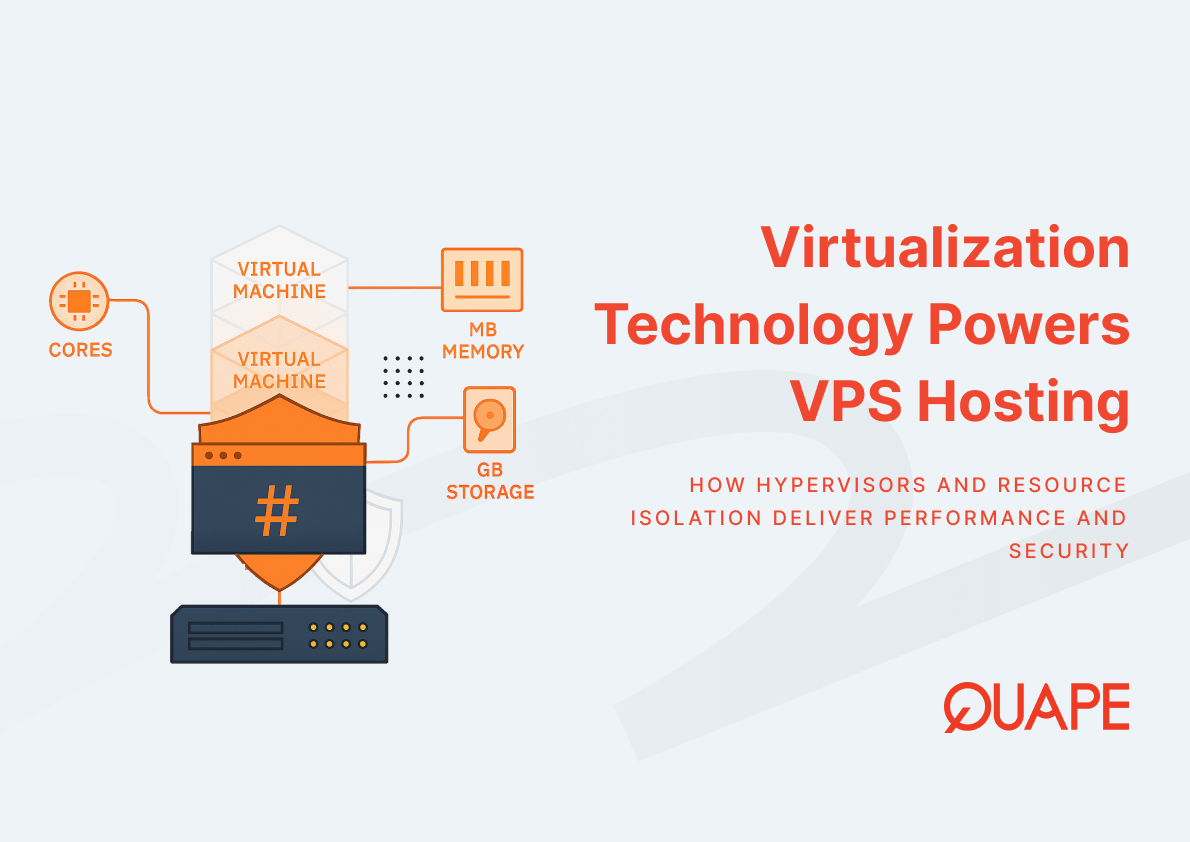

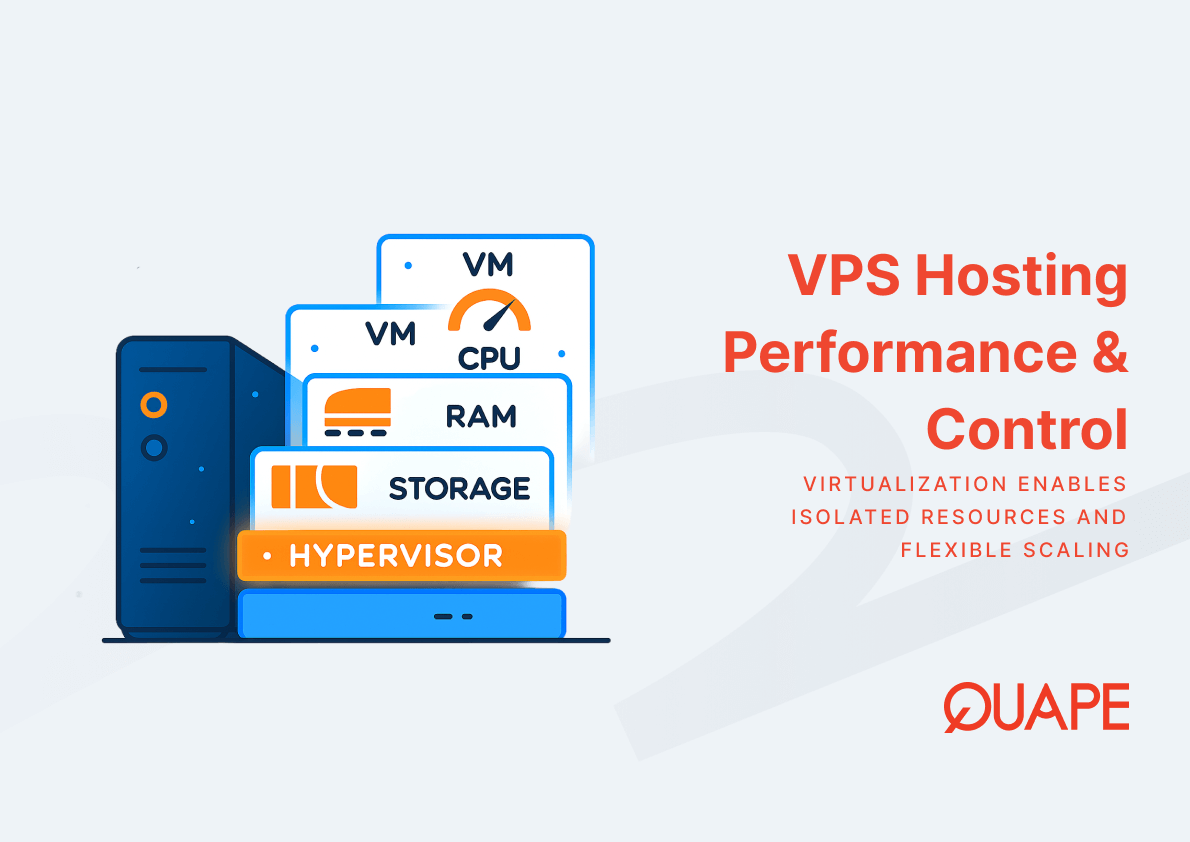

How VPS Hosting Supports and Enhances NVMe Performance Benefits

VPS hosting built on KVM virtualization preserves NVMe performance characteristics through direct device assignment and optimized I/O paths between virtual machines and physical storage. Older virtualization layers added significant overhead by intercepting every storage operation, translating it in hypervisor code, and then forwarding it to hardware. Modern hypervisors minimize this overhead with paravirtualized drivers and direct memory access paths that let VMs reach NVMe devices through short, optimized code paths. Research shows that software-based NVMe virtualization mechanisms now achieve 97-100% of native I/O performance, meaning VPS instances access NVMe storage with minimal penalty compared to bare-metal servers.
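On KVM hosts managed with libvirt, the paravirtualized path is typically selected in the guest’s disk definition. The sketch below generates one such `<disk>` element with Python’s standard `xml.etree.ElementTree`: a virtio bus, raw format, `cache='none'` to bypass the host page cache, and `io='native'` for Linux native AIO. The source device `/dev/nvme0n1` and guest device name `vda` are hypothetical, and this is one common configuration rather than how any particular provider sets up its VPS instances.

```python
import xml.etree.ElementTree as ET


def virtio_disk_xml(host_device: str, guest_dev: str = "vda") -> str:
    """Build a libvirt <disk> element that exposes a host block device via virtio."""
    disk = ET.Element("disk", type="block", device="disk")
    # Raw format, host page cache bypassed, Linux native asynchronous I/O.
    ET.SubElement(disk, "driver", name="qemu", type="raw", cache="none", io="native")
    ET.SubElement(disk, "source", dev=host_device)
    # Paravirtualized virtio bus instead of an emulated IDE or SATA controller.
    ET.SubElement(disk, "target", dev=guest_dev, bus="virtio")
    return ET.tostring(disk, encoding="unicode")


print(virtio_disk_xml("/dev/nvme0n1"))  # hypothetical NVMe namespace on the host
```

The resulting element can be saved to a file and attached to a domain with `virsh attach-device`.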

Resource isolation in VPS environments keeps the performance benefits of NVMe storage consistent across tenant workloads, preventing the “noisy neighbor” problems that plague shared hosting. When each VPS receives a dedicated allocation of CPU cores, memory, and IOPS capacity, one customer’s database backup cannot monopolize the NVMe device’s command queues and starve other VMs of I/O. Proper isolation requires both hypervisor-level controls and storage-layer quality-of-service mechanisms that guarantee each VM a minimum level of IOPS and bandwidth. This isolation turns NVMe’s raw performance potential into reliable, predictable performance that businesses can depend on for production workloads.
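A common building block for storage-layer QoS is a per-VM IOPS cap. libvirt, for example, exposes one through the `<iotune>` element (such as `total_iops_sec`); the sketch below instead shows the underlying idea as a generic token bucket. It only enforces a ceiling, not a guaranteed minimum, and the class and parameter names are illustrative. Time is passed in explicitly so the behavior is deterministic; a real limiter would read a monotonic clock.

```python
class TokenBucket:
    """Per-VM IOPS limiter: refills `rate_per_s` tokens per second up to `burst`."""

    def __init__(self, rate_per_s: float, burst: float) -> None:
        self.rate = rate_per_s
        self.capacity = burst
        self.tokens = burst      # start full so short bursts are allowed
        self.last = 0.0          # timestamp (seconds) of the previous call

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Admit one I/O of the given cost at time `now` if enough tokens remain."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Hypothetical cap: 1,000 IOPS sustained, bursts of up to 100 I/Os.
vm_a = TokenBucket(rate_per_s=1000, burst=100)
print(sum(vm_a.allow(now=0.0) for _ in range(150)))   # 100 admitted from the burst
print(sum(vm_a.allow(now=0.05) for _ in range(60)))   # 50 admitted after 50 ms of refill
```

Giving each VM its own bucket is what stops one tenant’s backup from consuming I/O capacity that other VMs on the same host rely on.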

Scalability in NVMe-backed VPS hosting behaves differently than in legacy storage environments because performance scales more linearly with resource allocation. When you upgrade from a 2-core VPS to a 4-core VPS on SATA storage, you might not see proportional gains because storage becomes the bottleneck as CPU capacity increases. On NVMe infrastructure, adding CPU cores and memory more often yields corresponding improvements because the storage layer can absorb the extra I/O demand. This makes capacity planning more predictable: if your workload parallelizes well and no other resource, such as network bandwidth or database locking, becomes the limit, doubling VPS resources can roughly double application throughput. For businesses evaluating VPS hosting options, this closer-to-linear scaling reduces the risk of outgrowing your infrastructure tier and simplifies forecasting hosting costs as traffic grows.
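A simple bottleneck model makes the scaling argument concrete: throughput is capped by whichever of CPU or storage saturates first. All numbers in the sketch are hypothetical (500 requests per second per core, 20 storage I/Os per request, and effective IOPS of 20,000 for the SATA case versus 200,000 for the NVMe case); the point is the shape of the result, not the figures.

```python
def max_requests_per_s(cores: int, req_per_core_s: int,
                       storage_iops: int, ios_per_request: int) -> float:
    """Throughput is limited by the tighter of the CPU and storage ceilings."""
    cpu_limit = cores * req_per_core_s
    storage_limit = storage_iops / ios_per_request
    return min(cpu_limit, storage_limit)


# Hypothetical workload and storage figures for illustration only.
REQ_PER_CORE_S = 500
IOS_PER_REQUEST = 20

for label, iops in [("SATA", 20_000), ("NVMe", 200_000)]:
    for cores in (2, 4):
        rps = max_requests_per_s(cores, REQ_PER_CORE_S, iops, IOS_PER_REQUEST)
        print(f"{label}, {cores} cores: {rps:,.0f} req/s")
```

Doubling cores leaves the SATA case flat at 1,000 requests per second because storage is already saturated, while the NVMe case doubles from 1,000 to 2,000.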

Conclusion

NVMe VPS hosting represents a fundamental infrastructure upgrade that enables Singapore businesses to support modern workload demands while controlling costs and maintaining competitive performance standards. The architectural advantages of PCIe connectivity, parallel command queuing, and reduced protocol overhead translate directly into faster database queries, more responsive APIs, and improved user experiences across digital properties. As data center SSD markets shift decisively toward NVMe dominance and workload intensity continues increasing, selecting hosting infrastructure built on NVMe storage positions your business to handle current requirements and accommodate future growth without disruptive migrations.

Contact our sales team to discuss how NVMe VPS hosting can support your specific performance requirements and business objectives.

Frequently Asked Questions

What makes NVMe VPS hosting faster than SATA-based VPS?

NVMe connects directly to the CPU via PCIe lanes instead of going through the SATA controller interface, eliminating protocol translation overhead and bandwidth limitations. NVMe also supports up to 65,535 parallel I/O queues compared to SATA’s single 32-command queue, allowing many operations to process simultaneously rather than waiting in line.

How much performance improvement can I expect from NVMe VPS hosting?

Enterprise testing shows NVMe SSDs deliver approximately 5.45× more sequential read bandwidth and 5.88× more random-read IOPS than enterprise SATA SSDs. Real-world database and virtualized workloads often show up to 8× better performance, though actual gains depend on your specific application characteristics and workload patterns.

Does NVMe VPS hosting cost significantly more than SATA-based options?

While NVMe infrastructure requires higher initial investment for PCIe-compatible hardware, economies of scale in the rapidly growing data center SSD market have narrowed the price gap. The performance-per-dollar advantage often justifies any premium because you can serve more users or transactions per VPS instance, reducing the total number of servers needed.

Will my application benefit from NVMe VPS hosting?

Applications that perform frequent database queries, handle high concurrent user loads, or process many small file operations benefit most from NVMe’s low latency and high IOPS. E-commerce platforms, API servers, database-driven CMS installations, and real-time analytics workloads typically see substantial performance improvements from NVMe storage.

How does virtualization affect NVMe performance?

Modern virtualization platforms using KVM with optimized I/O paths achieve 97-100% of bare-metal NVMe performance for virtual machines. Proper resource isolation ensures each VPS maintains consistent access to NVMe performance regardless of what other VMs on the same physical host are doing.

Is NVMe VPS hosting suitable for WordPress websites?

WordPress sites that handle significant traffic or run resource-intensive plugins benefit from NVMe’s ability to quickly process the dozens of database queries and file reads required to generate each page. The reduced storage latency directly improves Time to First Byte (TTFB), which affects both user experience and search rankings.

What happens to NVMe performance as I scale my VPS resources?

NVMe performance scales more linearly with resource allocation than SATA storage, so adding CPU cores and memory is more likely to yield proportional gains, provided your application can use the extra capacity. This predictable scaling simplifies capacity planning and reduces the risk of hitting performance ceilings as your application grows.

Can NVMe VPS hosting handle sudden traffic spikes?

NVMe’s parallel queuing architecture excels at handling burst workloads because it processes many simultaneous I/O requests without significant latency increases. This capability makes NVMe VPS hosting particularly effective for e-commerce flash sales, content launches, or applications with unpredictable traffic patterns.

- NVMe VPS Hosting: Why Ultra-Fast Storage Makes a Massive Performance Difference - December 11, 2025